Cassava's AI Factory build - ephemeral or phenomenal?

Cassava's Africa Data Centres to put at least $720-m into "AI Factories" in Africa

Last week saw great fanfare, and largely copy/paste coverage in business and tech media, of the Cassava press release. It was big news: the dawn of a new AI age in Africa. Africa Data Centres in South Africa would be given a major upgrade, followed by Egypt, Kenya, Morocco and Nigeria, with $720-m of Nvidia GPUs, in what was breathlessly called the rollout of “AI Factories”.

What’s an AI Factory? The glib definition is: “Unlike traditional data centres, AI factories do more than store and process data — they manufacture intelligence at scale, transforming raw data into real-time insights.”

OK, clear as mud.

There’s a Wikipedia page: “The AI factory is an AI-centred decision-making engine… optimises day-to-day operations by relegating smaller‑scale decisions to machine learning algorithms. The factory is structured around four core elements: the data pipeline, algorithm development, the experimentation platform, and the software infrastructure. By design, the AI factory can run in a virtuous cycle: the more data it receives, the better its algorithms become, improving its output, and attracting more users, which generates even more data.”

The term seems to have been coined in a 2020 book, then vanished for years, only to be revived and relentlessly touted earlier this year by Nvidia CEO Jensen Huang (as covered by PC Mag and Forbes).

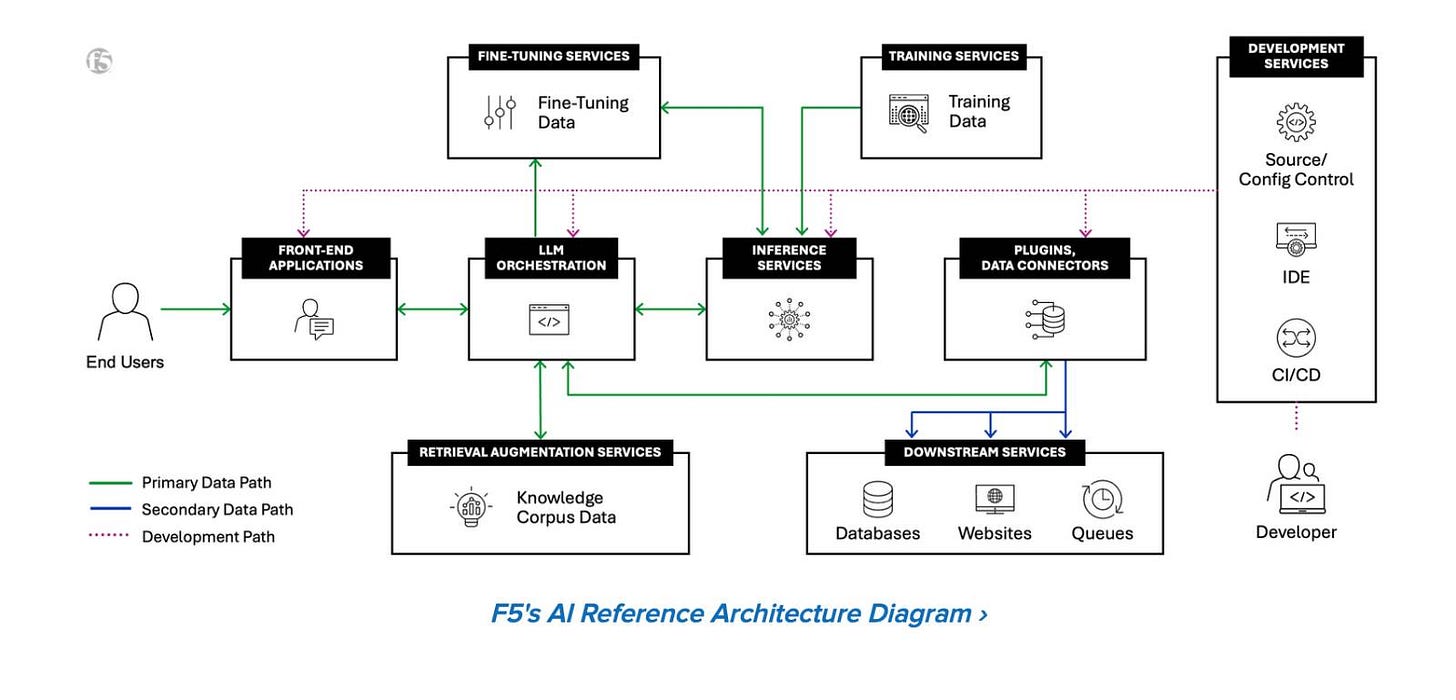

Nvidia’s definition: “A traditional data centre handles diverse workloads and is built for general-purpose computing, AI factories are optimised to create value from AI. They orchestrate the entire AI lifecycle — from data ingestion to training, fine-tuning and, most critically, high-volume inference.”

In Nvidiaspeak, intelligence is measured by AI token throughput: “the real-time predictions that drive decisions, automation and entirely new services”.

OK, got it… it’s a GPU-rich DC with a robust application environment to host training, visualisation, data science UIs and massive high-performance databases (note: Google’s counterpart to the GPU is its TPU, a Tensor rather than Graphics Processing Unit). Along with the compute and orchestration/devops support systems, a useful AI factory also needs to have a tonne of really good quality data already in the DC, both for speed and because it costs lots of money to move large amounts of data around.

For the more technical, this is the reference architecture from F5 (network security appliance company):

SO WHAT? – For many of a more cynical bent, the Cassava announcement felt like AI-washing … a regular old hardware upgrade cycle spun as AI-fabulous and earth-shattering. If the execution is exemplary, however, this initiative really could be a game-changer.

Here are some key points about Cassava’s announcement:

$720-million is to be spent, some from Cassava’s own capital injection and some from debt (not much detail available yet).

Timelines are ambitious: the first 3,000 GPUs are to be commissioned in SA by June, with Egypt, Kenya, Nigeria and Morocco rolled out before year end, for a total of 12,000 GPUs (of as yet unknown specification).

This puts compute capacity directly into the hands of African technology developers, on a continent where, as noted previously, less than 5% of the world’s AI-optimised compute capacity is installed.

This significantly shifts the balance of power away from the global hyperscalers that are trying to drive use of global (i.e. largely US-based) Cloud compute.

In effect, Africa could benefit from what looks set to become a proxy war between global hyperscalers driving Cloud (Google just released its new Ironwood TPU processors, billed as offering 10X the performance of the previous generation) and hardware manufacturers wanting to sell tin (and services).

The long-term cost vs benefit of having DCs with powerful AI capabilities in Africa is still unknown, and cool heads should prevail lest we get swept up in the irrational exuberance. Energy demand from AI data centres will quadruple by 2030, says the International Energy Agency (IEA), with one DC today consuming as much energy as 100,000 households, and some under construction requiring 20 times more. Also, done badly, AI could suck water from some of the world’s driest areas, an investigation by the Guardian revealed.

On the upside, harnessing AI could potentially balance the energy equation by making various activities more efficient.

And strategically, having AI DCs in Africa makes the continent less dependent on the Global North and more self-sufficient.
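For a rough sense of scale, the headline numbers above can be put through some back-of-envelope arithmetic. This is only a sketch: it assumes the full $720-m is spread evenly across all 12,000 GPUs, which the announcement does not confirm, and the variable and function names are made up for illustration. The implied per-GPU figure is an all-in number (servers, networking, power and cooling would all be inside it), not a chip price.

```python
# Back-of-envelope arithmetic on the announced figures.
# ASSUMPTION: the whole $720m is split evenly across all 12,000 GPUs.
# The announcement gives no such breakdown; names here are illustrative only.

TOTAL_SPEND_USD = 720_000_000   # announced investment
TOTAL_GPUS = 12_000             # announced total across five countries
FIRST_TRANCHE_GPUS = 3_000      # first batch, planned for South Africa


def implied_spend_per_gpu(total_spend_usd: float, gpu_count: int) -> float:
    """All-in spend per GPU if the budget were divided evenly."""
    return total_spend_usd / gpu_count


spend_per_gpu = implied_spend_per_gpu(TOTAL_SPEND_USD, TOTAL_GPUS)
first_tranche_share = FIRST_TRANCHE_GPUS / TOTAL_GPUS
first_tranche_spend = spend_per_gpu * FIRST_TRANCHE_GPUS

print(f"Implied all-in spend per GPU: ${spend_per_gpu:,.0f}")
print(f"First tranche share of GPUs:  {first_tranche_share:.0%}")
print(f"Implied first tranche spend:  ${first_tranche_spend:,.0f}")
```

On those assumptions the budget works out to $60,000 per GPU, with the South African tranche accounting for a quarter of the units. Until the GPU specification is known, that number says little about how much raw compute is actually being bought.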

ZOOM OUT – We now wait for more concrete details of what Cassava has planned, but with some hyperscalers pulling in their horns on their own DC builds, and some reality setting in on what AI can and cannot do, local compute infrastructure is almost certainly a Good Thing.

Not only does it create a valuable resource for ongoing exploitation, it also creates a skilled, experienced local workforce that can then maintain, optimise and extend it.

[Written and edited with no assistance of AI]